The Theoretical Concepts You Need To Learn Before Implementing a Convolutional Neural Network

Introduction

Hello, my amazing reader! We all know that artificial intelligence has kept going strong over the last decades, and so have deep learning and, specifically, convolutional neural networks (CNNs, or ConvNets for short).

Convolutional neural networks are the subject of this article because they are one of the most famous and popular architectures used by developers.

Basic Neural Network Architecture

A CNN is a deep neural network whose way of recognizing images is inspired by the way our visual cortex works.

To understand this model from scratch, we start by presenting its basic architecture.

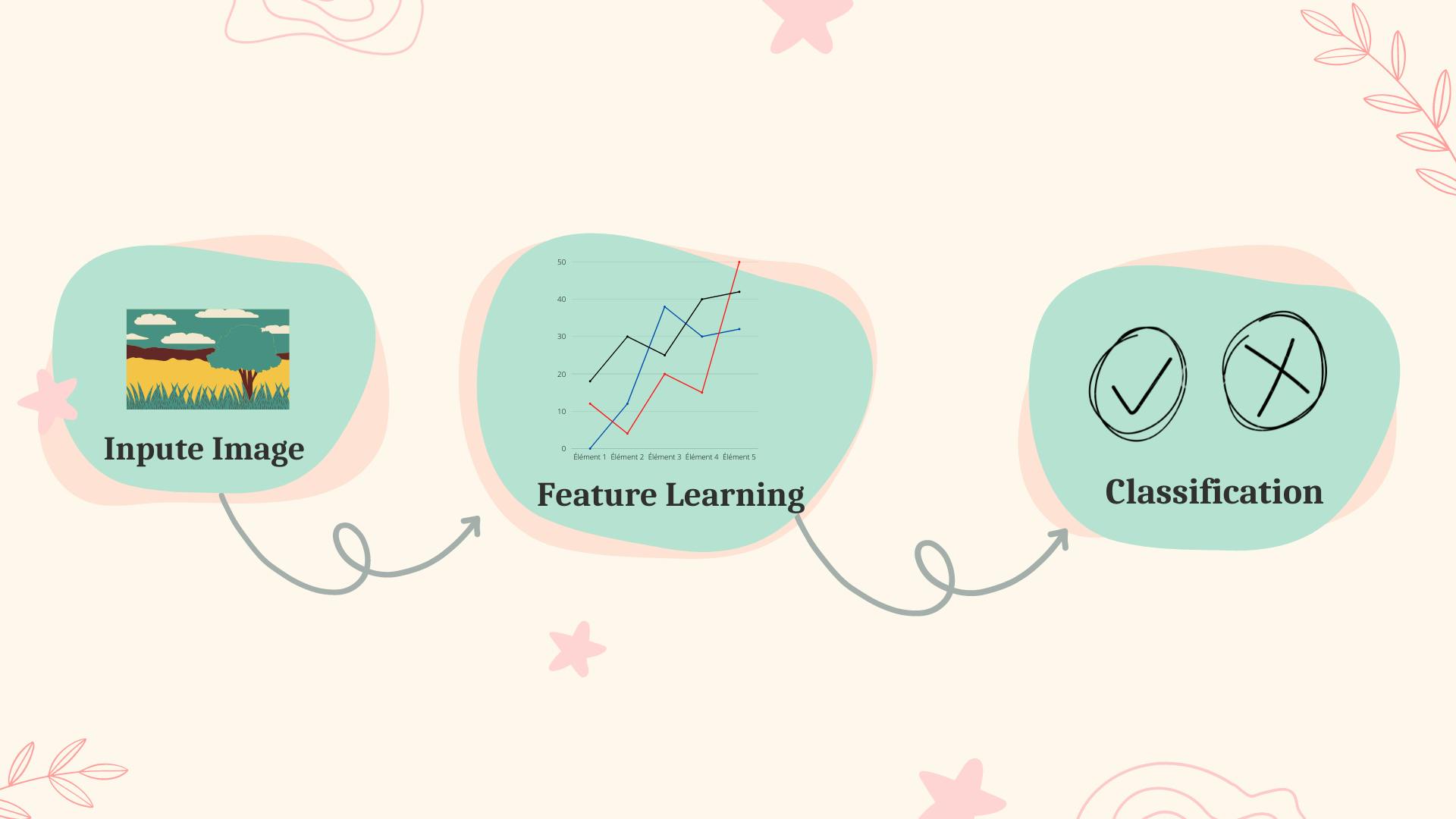

As you can see in the picture, we can divide the entire architecture into two broad sections: feature learning and classification.

First, the input image enters the feature extraction network. Then the extracted feature signals enter the classification neural network, which generates the output.
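
To make the two stages concrete, here is a minimal sketch that only tracks the shape of the data as it flows through a hypothetical network: two convolution + pooling pairs for feature learning, then a flatten step and a fully connected layer for classification. The 28×28 grayscale input, the filter counts (8 and 16), the 3×3 filter size, the 2×2 pooling, and the 10 output classes are all assumptions chosen for illustration, not values taken from the pictures.

```python
def conv_shape(shape, num_filters, k):
    """Shape after a 'valid' convolution (stride 1, no padding).
    Each filter spans all input channels and yields one feature map,
    so the output has num_filters channels."""
    _, h, w = shape
    return (num_filters, h - k + 1, w - k + 1)


def pool_shape(shape, p):
    """Shape after non-overlapping p x p pooling (stride p).
    Floor division drops leftover rows/columns."""
    c, h, w = shape
    return (c, h // p, w // p)


def trace_network(input_shape):
    """Print and return the shape after each layer of a hypothetical CNN."""
    shape = input_shape
    steps = [("input", shape)]
    # Feature learning: pairs of convolution and pooling layers.
    for num_filters in (8, 16):  # assumed filter counts
        shape = conv_shape(shape, num_filters, k=3)
        steps.append((f"conv ({num_filters} filters, 3x3)", shape))
        shape = pool_shape(shape, p=2)
        steps.append(("pool (2x2)", shape))
    # Classification: flatten the feature maps into one vector,
    # then a fully connected layer with one output per class (10 assumed).
    c, h, w = shape
    steps.append(("flatten", (c * h * w,)))
    steps.append(("fully connected (10 classes)", (10,)))
    for name, s in steps:
        print(f"{name:30} {s}")
    return steps


steps = trace_network((1, 28, 28))  # one 28x28 grayscale image (assumed)
```

With these assumed settings, the image ends up as 16 feature maps of 5×5, that is, a vector of 400 values handed to the classification part.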

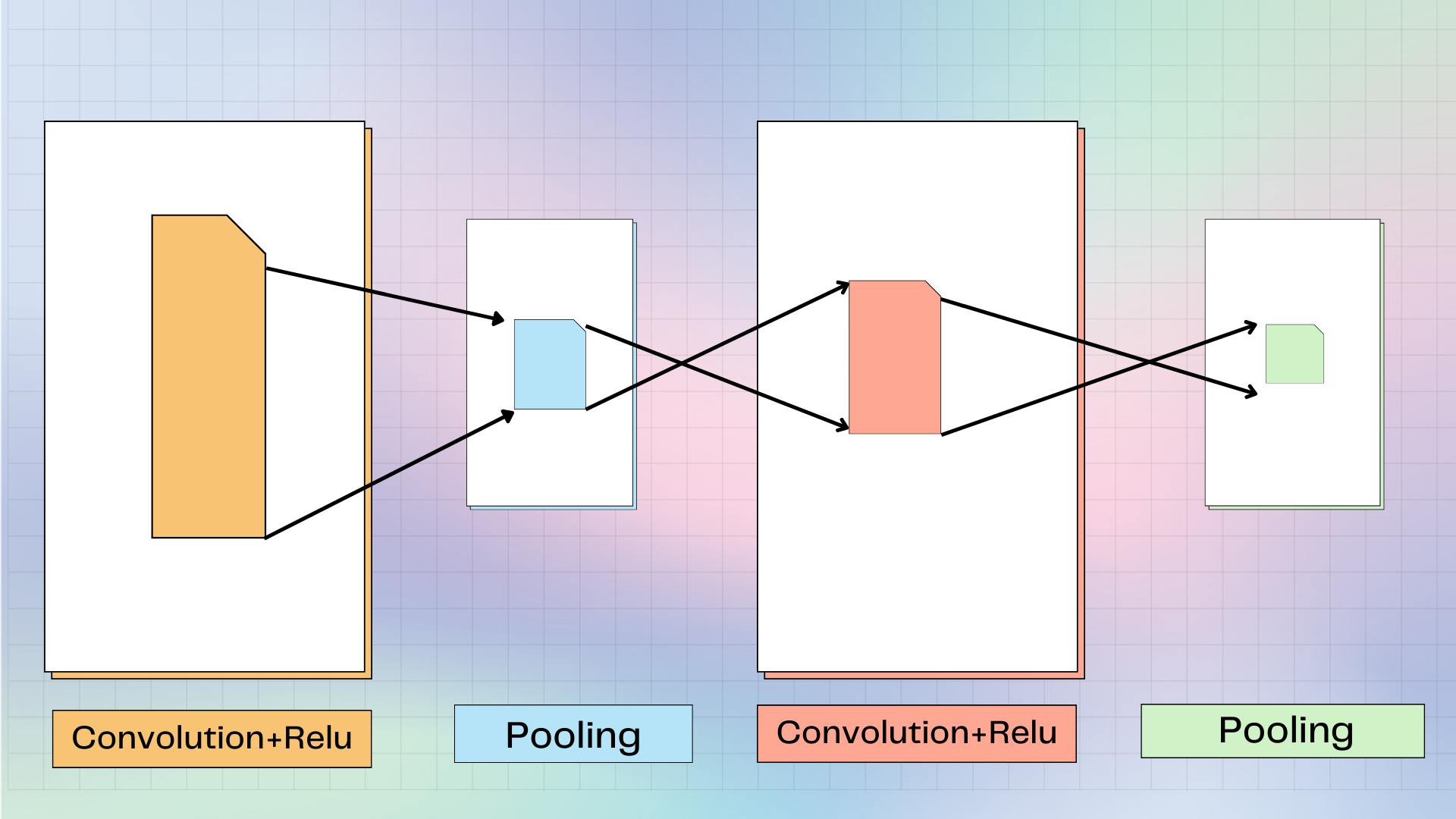

Feature learning is based on stacks of paired convolution and pooling layers.

The convolution layer transforms the images using the convolution operation, applying a collection of digital filters to them.

The pooling layer combines neighboring pixels into a single pixel, which means that it reduces the dimensions of the image.

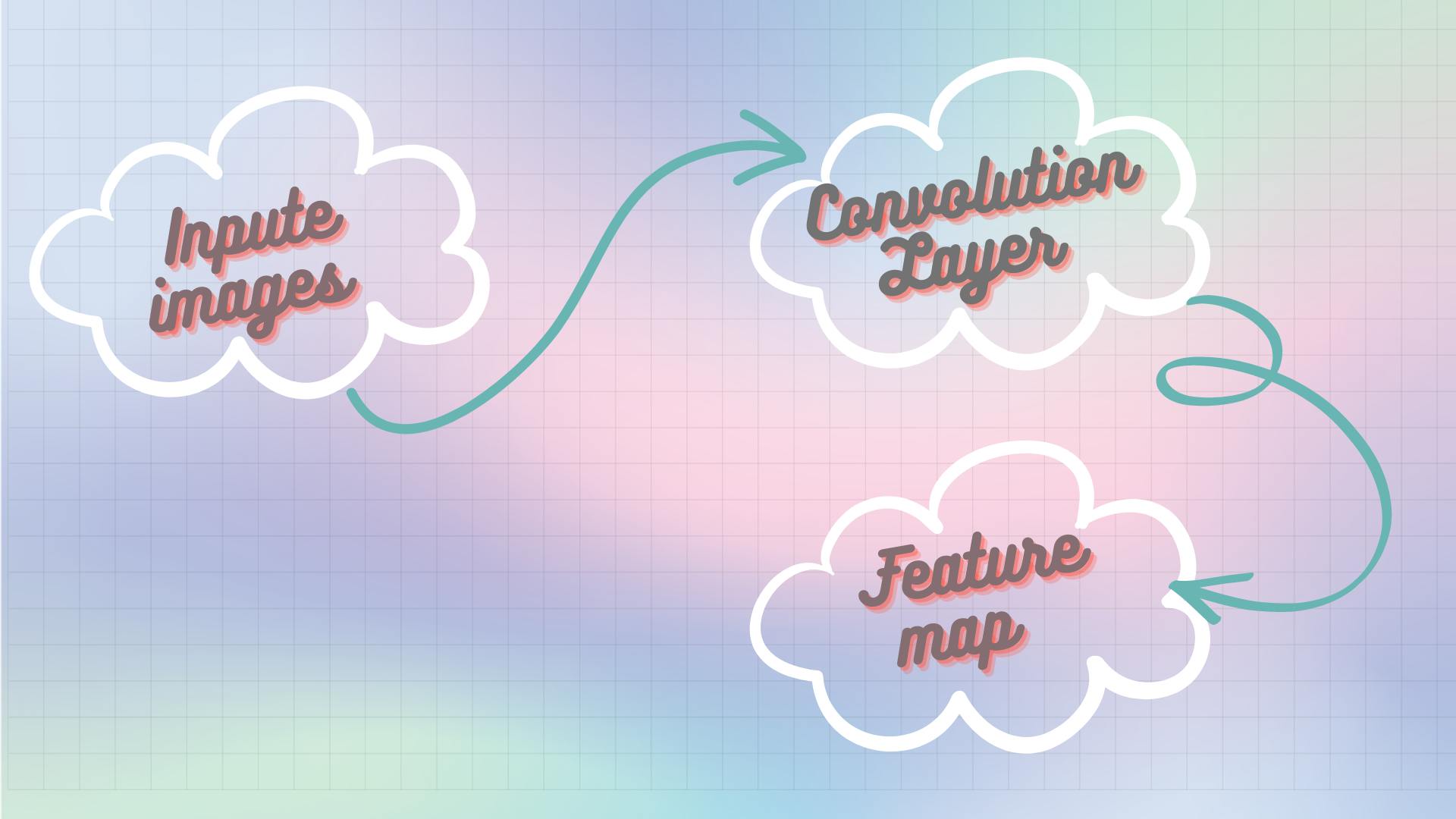

Let's go deeper to understand the convolution layer.

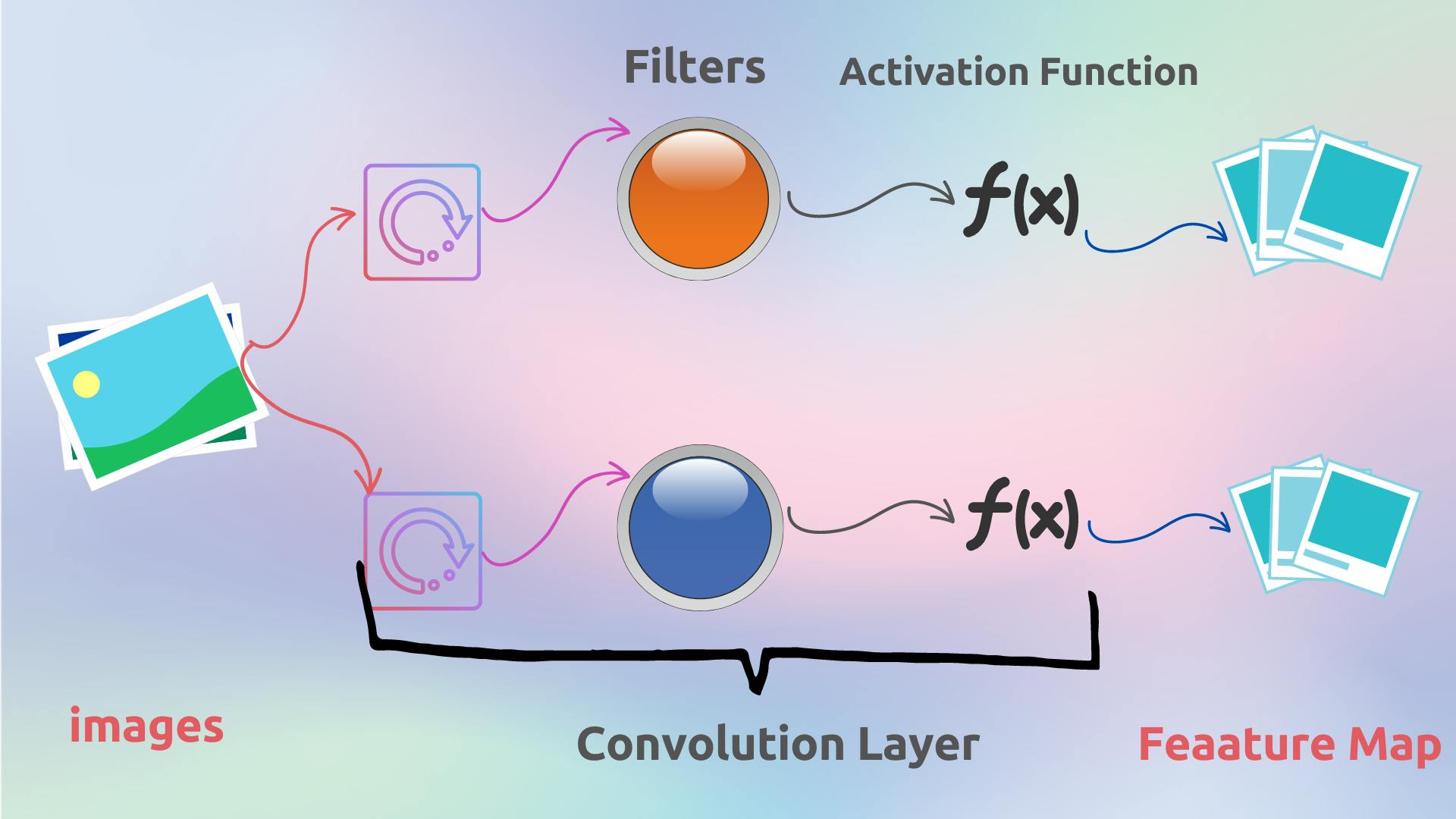

The convolutional layer generates feature maps from images. It contains filters (convolutional filters) that transform the images, and the number of feature maps is the same as the number of convolutional filters. The filters of a convolution layer are two-dimensional matrices (for a single-channel, grayscale image).
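
The one-to-one link between filters and feature maps can be sketched in plain Python. This is a minimal sketch under stated assumptions: the 4×4 image and the two 2×2 filters are hypothetical values (not the ones in the pictures), and `conv2d` and `conv_layer` are illustrative helper names. The filter slides with a stride of 1 and no padding. Like the convolution layers in common deep learning libraries, it does not flip the filter, so strictly speaking it computes a cross-correlation, which is what CNNs call convolution.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the
    feature map: each output value is the sum of element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for m in range(kh):
                for n in range(kw):
                    total += image[i + m][j + n] * kernel[m][n]
            row.append(total)
        feature_map.append(row)
    return feature_map


def conv_layer(image, filters):
    """A convolution layer: exactly one feature map per filter."""
    return [conv2d(image, f) for f in filters]


# Hypothetical 4x4 grayscale image and two hypothetical 2x2 filters.
image = [
    [1, 0, 2, 3],
    [4, 6, 1, 0],
    [0, 1, 5, 2],
    [3, 2, 1, 4],
]
filters = [
    [[1, 0], [0, 1]],    # adds each pixel to its lower-right neighbour
    [[1, -1], [1, -1]],  # left column minus right column: vertical edges
]
maps = conv_layer(image, filters)
print(len(maps))  # 2 filters -> 2 feature maps
for fm in maps:
    print(fm)
```

Each 4×4 input produces 3×3 feature maps here, and adding a third filter to the list would simply add a third feature map.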

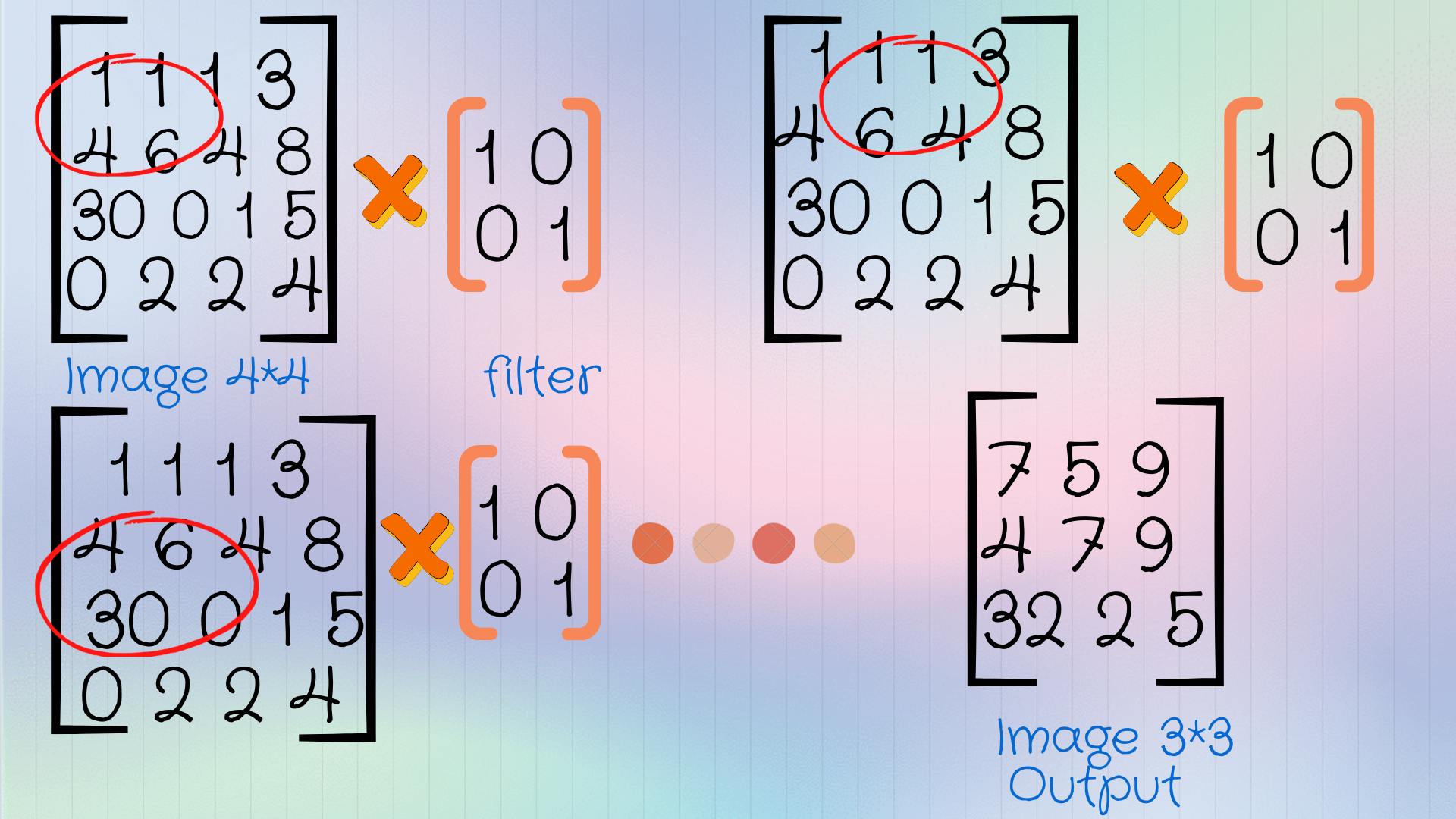

EXAMPLE

Here we have a 4×4 pixel image and one 2×2 convolution filter.

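The convolution starts by placing the filter on the upper-left 2×2 submatrix of the image, multiplying each pixel by the corresponding filter value, and adding the products: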

$$1 \times 1 + 0 \times 1 + 4 \times 0 + 6 \times 1 = 7$$

The result of this sum becomes the upper-left value of the output. The filter then moves one pixel to the right, and the next convolution is applied to that block:

$$1 \times 1 + 1 \times 0 + 0 \times 6 + 1 \times 4 = 5$$

This process continues across the whole image, and the final result is shown in the picture: the 4×4 pixel image has been converted to a 3×3 pixel image.
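
The sliding-and-summing steps above can be written as a formula. The symbols are introduced here for illustration: $X$ is the input image, $K$ a $k \times k$ filter, $Y$ the feature map (with $Y_{0,0}$ its upper-left value), $n$ the image width, and $s$ the stride, that is, how many pixels the filter moves at each step. With a stride of 1, each output value is:

$$Y_{i,j} = \sum_{m=0}^{k-1} \sum_{l=0}^{k-1} X_{i+m,\,j+l}\,K_{m,l}$$

and the width of the output (the height works the same way) is:

$$\left\lfloor \frac{n - k}{s} \right\rfloor + 1$$

In the example, $n = 4$, $k = 2$ and $s = 1$, so the output is $(4 - 2)/1 + 1 = 3$ pixels wide, which matches the 3×3 result.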

Activation Functions And Pooling Layer

As we see in the picture, there is another layer between the convolution filter and the feature map: the activation function. These are the same activation functions we use in ordinary neural networks.

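ReLU (rectified linear unit) is the most popular activation function. It computes ReLU(x) = max(0, x): positive values pass through unchanged and negative values become zero.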
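
Because ReLU works on one value at a time, applying it to a feature map just means applying it to every element. A minimal sketch, assuming the feature map is stored as a list of rows; the values below are hypothetical.

```python
def relu(x):
    """Rectified linear unit: keep positive values, replace negatives by 0."""
    return max(0, x)


def relu_feature_map(feature_map):
    """Apply ReLU to every value of a 2D feature map (list of rows)."""
    return [[relu(v) for v in row] for row in feature_map]


fm = [[-1, 3, 0], [-3, 1, 4], [0, -3, 0]]  # hypothetical feature map
print(relu_feature_map(fm))  # [[0, 3, 0], [0, 1, 4], [0, 0, 0]]
```

The shape of the feature map does not change; only the negative responses are switched off.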

Pooling Layer

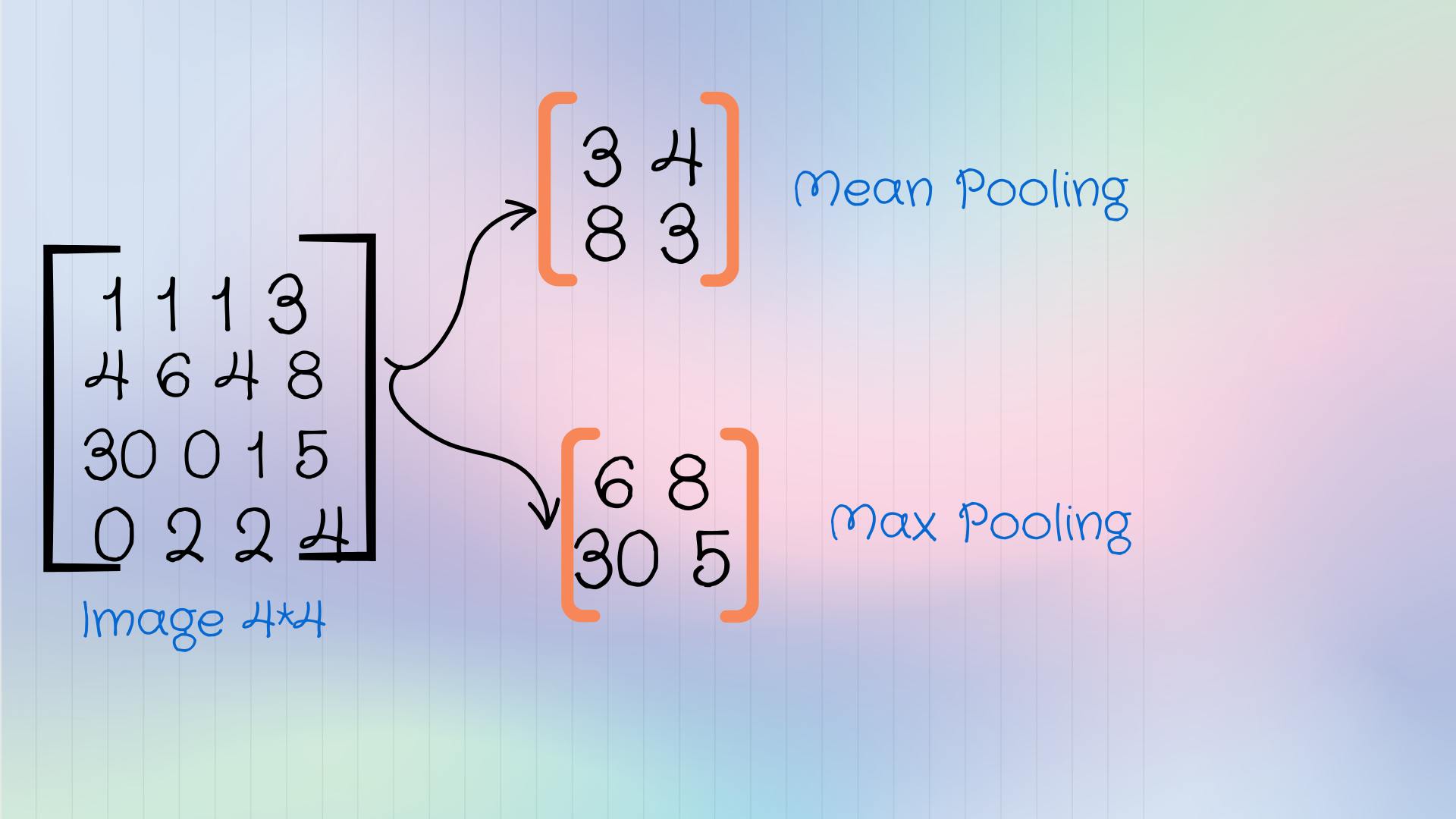

The task of the pooling layer is to reduce the size of the image, and its operation is very simple.

There are two common types of pooling: max pooling and mean (average) pooling.

How to generate the mean pooling value

For mean pooling, we take the mean of each 2×2 pooling area. For example: (1+1+4+6)/4 = 3 and (1+3+4+8)/4 = 4.

The remaining areas are computed in the same way.

How to generate the max pooling value

We take the largest value in each pooling area. For the two areas above, that gives max(1, 1, 4, 6) = 6 and max(1, 3, 4, 8) = 8.
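
Both pooling types follow the same pattern: split the image into areas and reduce each area to a single number. A minimal sketch, assuming non-overlapping 2×2 areas (stride 2); `pool2d` and its `mode` parameter are illustrative names. The 4×4 image is hypothetical, but its two upper areas hold the values used in the mean pooling example (1, 1, 4, 6 and 1, 3, 4, 8).

```python
def pool2d(image, size=2, mode="max"):
    """Non-overlapping pooling: split `image` into size x size areas
    (stride = size) and reduce each area to a single value."""
    out = []
    for i in range(0, len(image) - size + 1, size):
        row = []
        for j in range(0, len(image[0]) - size + 1, size):
            area = [image[i + m][j + n] for m in range(size) for n in range(size)]
            if mode == "max":
                row.append(max(area))              # largest value in the area
            elif mode == "mean":
                row.append(sum(area) / len(area))  # average of the area
            else:
                raise ValueError(f"unknown pooling mode: {mode}")
        out.append(row)
    return out


image = [
    [1, 1, 1, 3],
    [4, 6, 4, 8],
    [2, 0, 5, 1],
    [3, 7, 2, 2],
]
print(pool2d(image, mode="mean"))  # [[3.0, 4.0], [3.0, 2.5]]
print(pool2d(image, mode="max"))   # [[6, 8], [7, 5]]
```

Either way, the 4×4 image becomes 2×2: pooling keeps one value per area, which is how it reduces the size of the image.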

Conclusion

I first gathered this information while working on my thesis, and this foundation made my coding journey clearer, easier, and more meaningful. Good luck to everyone!